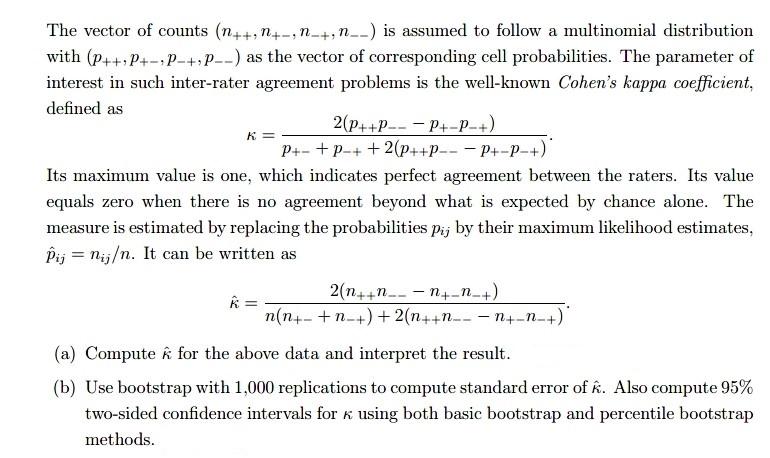

The Equivalence of Weighted Kappa and the Intraclass Correlation Coefficient as Measures of Reliability - Joseph L. Fleiss, Jacob Cohen, 1973

Results for SISA dataset. Accuracy (blue), Cohen's kappa (red), and AUC...

INTER-RATER RELIABILITY OF ACTUAL TAGGED EMOTION CATEGORIES VALIDATION USING COHEN'S KAPPA COEFFICIENT

Measuring Agreement with Cohen's Kappa Statistic

Inter-Rater: Software for analysis of inter-rater reliability by permutating pairs of multiple users


![SOLVED: Cohens Kappa [R] Two pathologist diagnose (independent from each other) 118 medical images concerning cervical cancer. They diagnose it concerning the categories: Negative (2) atypical change (3) local carcinoma (4) invasive](https://cdn.numerade.com/ask_images/b9ecfcd5f661419abd51bff7ead3edd5.jpg)

![Fleiss' Kappa and Inter rater agreement interpretation [24]](https://www.researchgate.net/publication/281652142/figure/tbl3/AS:613853020819479@1523365373663/Fleiss-Kappa-and-Inter-rater-agreement-interpretation-24.png)
